Lu Sang

PhD student at the Technical University of Munich

“In mathematics, you don’t understand things. You just get used to them.” -John von Neumann

I am a PhD student in the Computer Vision Group at TU Munich under the guidance of Prof. Cremers. Before that, I received a Master's degree in Mathematics from TU Munich and a Bachelor's degree in Applied Mathematics from Tongji University.

I am passionate about applying elegant mathematical theories to practical, real-world challenges in my research. My research interests include 3D geometry representation, photometric stereo and BRDF modeling, and 3D and 4D reconstruction.

🆕 News

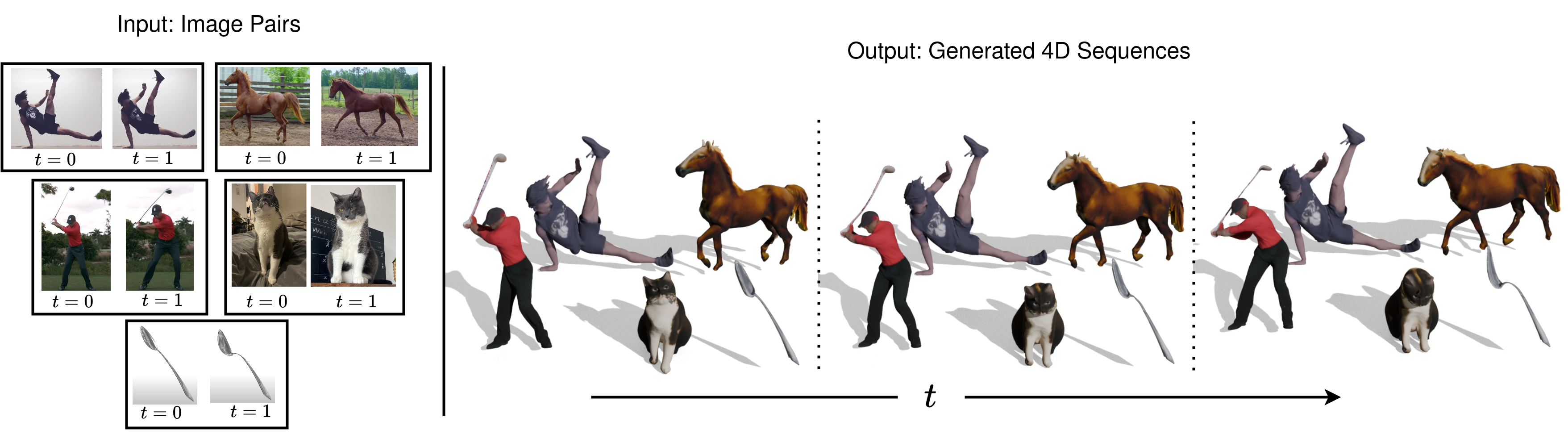

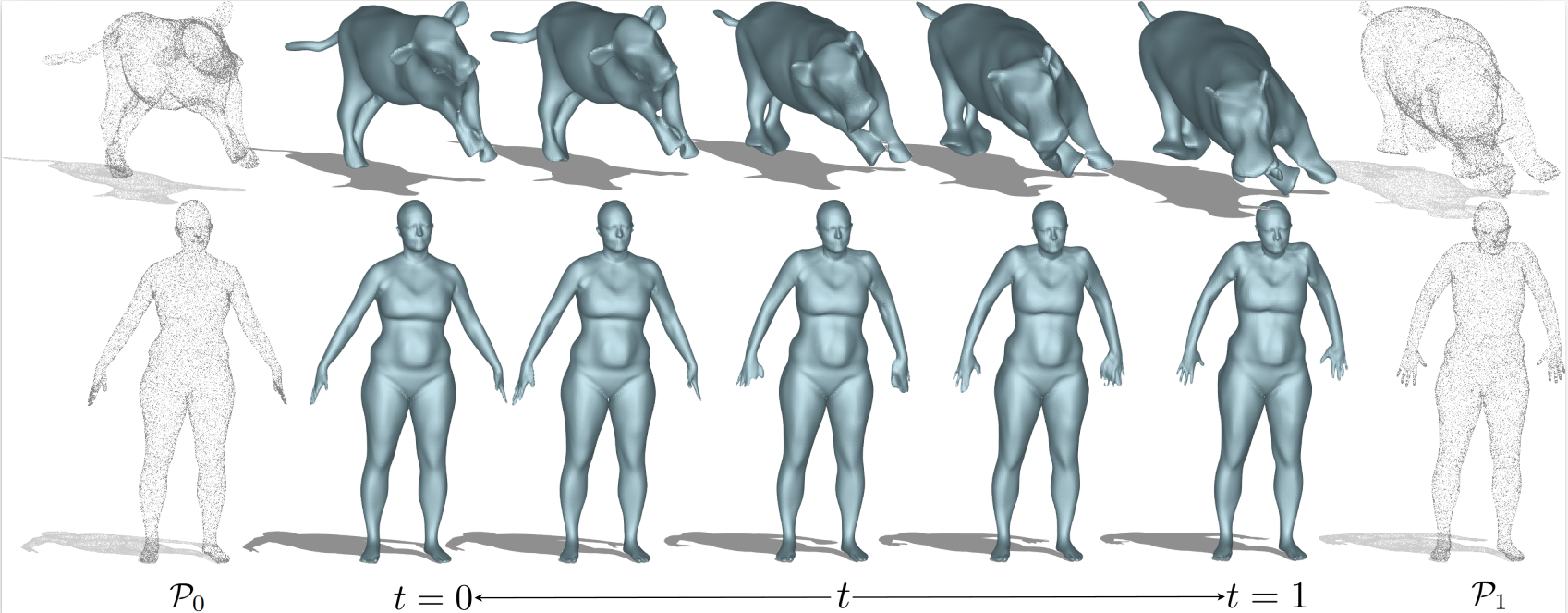

| Nov 11, 2025 | Our paper TwoSquared:4D Reconstruction from 2D Image Pairs is accepted by 3DV 2026 as oral. |

|---|---|

| Sep 16, 2025 | I started my internship at Google Zurich. |

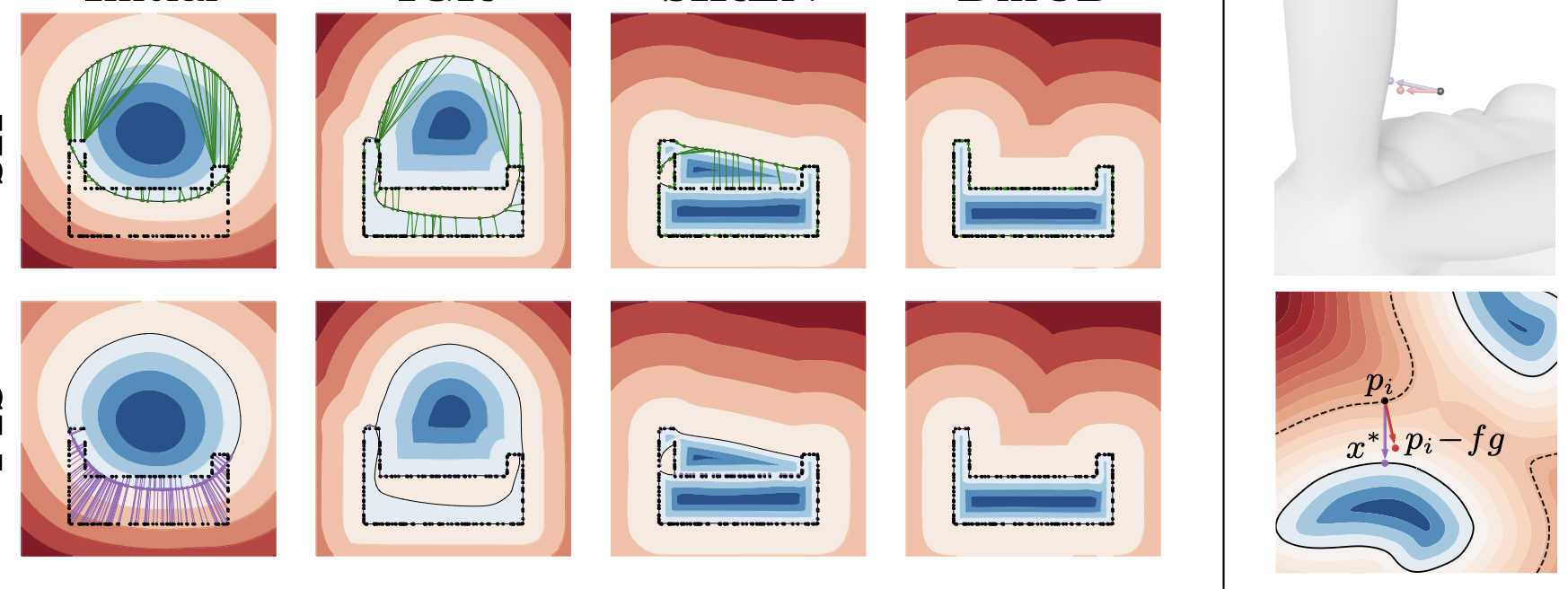

| Jul 01, 2025 | One paper was accepted to SGP! |

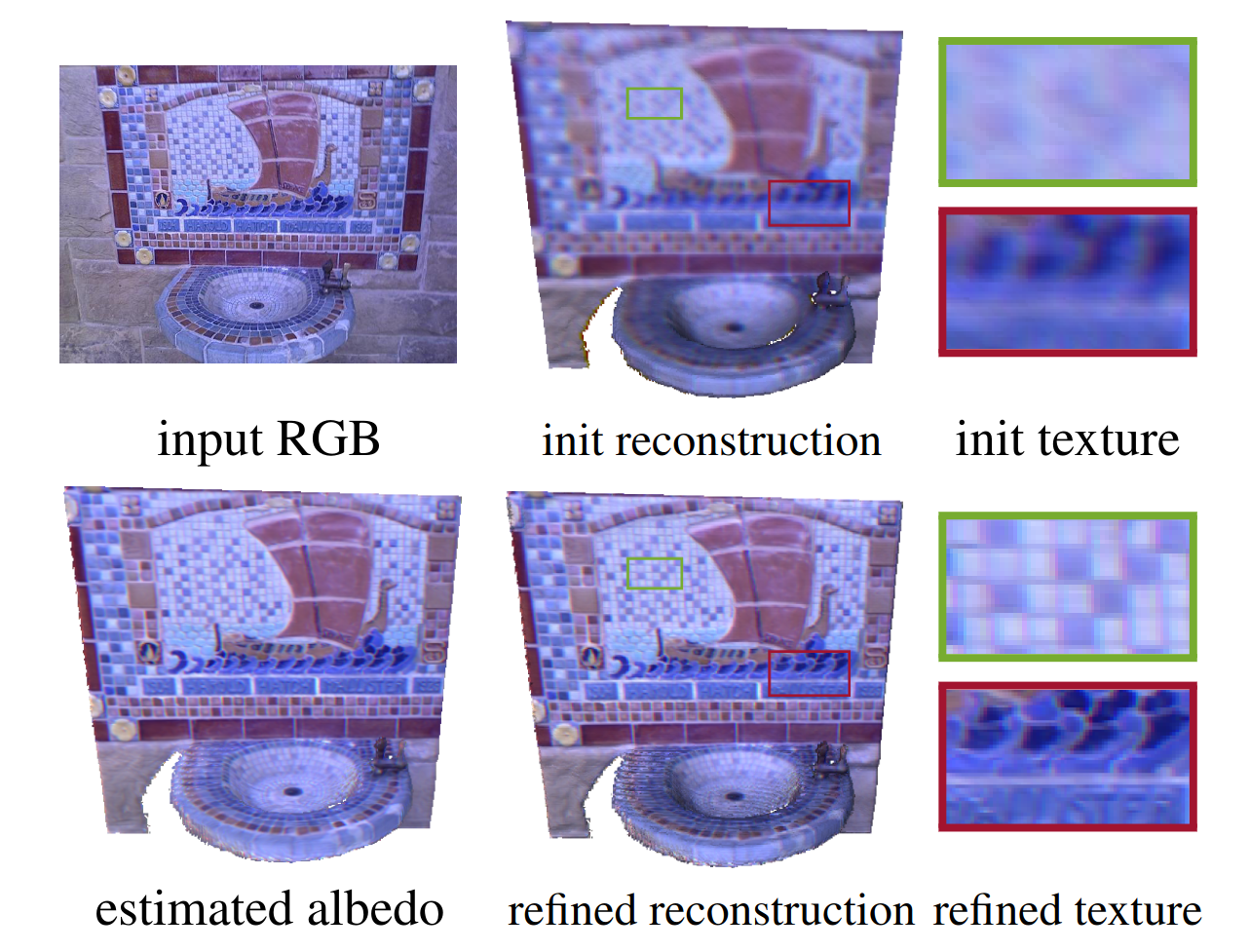

| Feb 28, 2025 | One paper accepted at CVPR 2025! |

| Jan 23, 2025 | One paper accepted at ICLR 2025! |